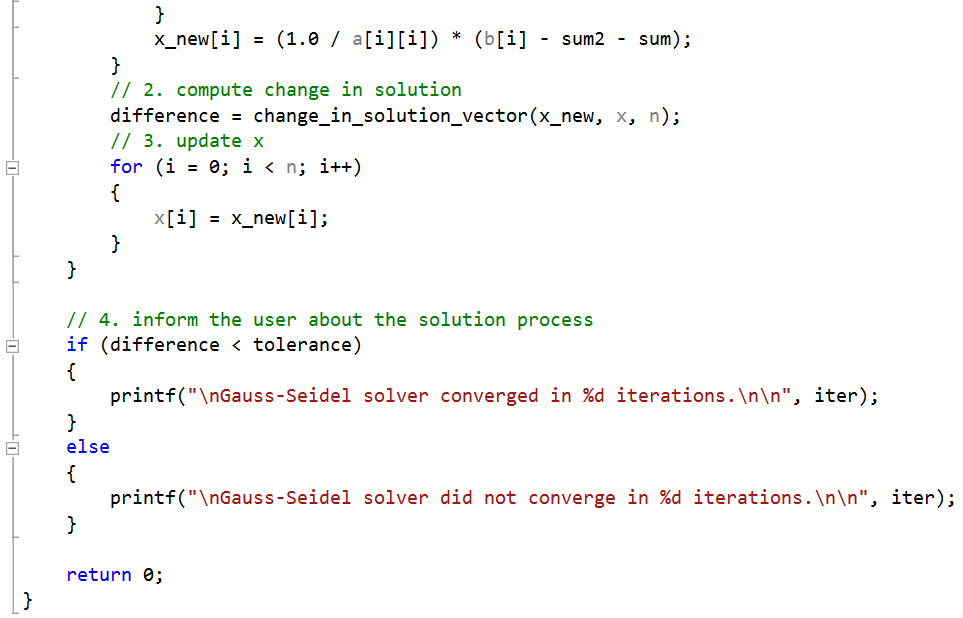

As a final note, most “production” multigrid solvers use additional levels of refinement. We can clearly see the benefit of the Multigrid scheme: the number of iterations on the fine mesh, which contains more data points, is greatly reduced! The effect is even more pronounced in 2D and 3D where the number of unknowns reduces by a factor of 4 or 8 between each grid level. The Gauss-Seidel data on left shows the solution every 250 iterations, while the Multigrid plot on right plots data after every 25 iterations on the fine level. Figure 6 below plots the evolution of the solution \(\phi^n\) for the two solvers. These values were arrived at by trial and error so there may be better combinations out there. This result was obtained using only a single iteration to update \((\phi^n)^*\) (so only a single pass), and 50 iterations to approximate \(\epsilon_c\). Comparing operation counts, we see that the MG needs a factor of 6.9 fewer operations that Gauss Seidel (1.6 million vs. Some of these operations could be optimized out but I kept them here for clarity. We additionally have operations to compute the residue and interpolate between meshes. This same GS scheme is used in the Multigrid solver to iterate \((\phi^n)^*\) and \(\epsilon_c\), with the caveat that the second iteration is on (ni/2) nodes. Hence, the total number of iterations for the GS solver is (num_its*ni*5 + (num_its/R_freq)*5). This also involved 5 operations but these occur only every R_freq iterations. R_i = (phi - 2*phi + phi )/ (dz*dz ) - b #compute residue to check for convergence if (it%R_freq = 0 ):

Using this scheme, we have 5 operations per each node per each Gauss-Seidel iteration, I mainly only counted stand-alone multiplications or accesses to the solution vector. This is bit of a fuzzy math, but I tried to stay consistent between the two solvers. Thus, it’s better to compare the number of operations.

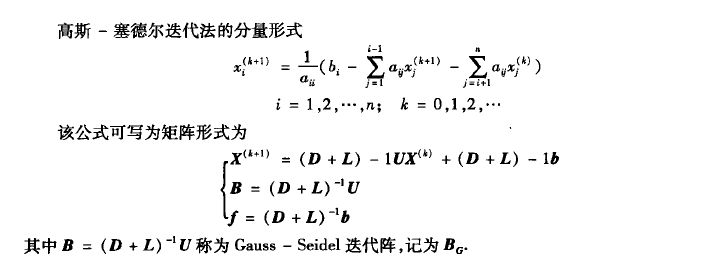

The GS solver requires 17,500 iterations to converge, while the MG solver needs only 93 loops of the above algorithm to reach the same tolerance! But comparing the number of iterations is a bit like comparing apples to oranges since each MG iteration is much more complicated. This algorithm is illustrated with the attached Python code. $$ \frac = (\phi_f^n)^* – \epsilon_f $$ħ) We then return to step 1 and continue until the norm of the residue vector \(R^n\) reaches some tolerance value.

Previously on this blog we saw how to obtain a numerical approximation to the Poisson’s equation \(\nabla^2\phi =b \) using the Finite Difference or Finite Volume method.

0 kommentar(er)

0 kommentar(er)